By George Trujillo, Principal Data Strategist, DataStax

Innovation is driven by the ease and agility of working with data. Increasing ROI for the business requires a strategic understanding of where and how an organization wins with data, and the ability to identify those places clearly. It’s the only way to execute a strategy at a high level, with speed and scale, and to spread that success to other parts of the organization. Here, I’ll highlight where these important “data integration points” are and why they are key determinants of success in an organization’s data and analytics strategy.

A sea of complexity

For years, data ecosystems have gotten more complex due to discrete (and not necessarily strategic) data-platform decisions aimed at addressing new projects, use cases, or initiatives. Layering technology on the overall data architecture introduces more complexity. Today, data architecture challenges and integration complexity impact the speed of innovation, data quality, data security, data governance, and just about anything important around generating value from data. For most organizations, if this complexity isn’t addressed, business outcomes will be diluted.

Increasing data volumes and velocity can slow the speed at which teams add or change the analytical data structures at data integration points, where data from multiple sources is correlated into high-value business assets. For real-time decision-making use cases, these integration points can live in an in-memory or database cache. For data warehouses, an integration point can be a wide-column analytical table.
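To make the idea of a data integration point more concrete, here is a minimal Python sketch. It assumes three hypothetical sources (a clickstream feed, a CRM lookup, and an order feed) that share a `customer_id` key; the source names, fields, and the `build_wide_rows` helper are illustrative only. The sketch correlates them into one wide row per customer, the kind of record that might be cached for real-time decisioning or stored as a wide-column analytical table.

```python
from collections import defaultdict

# Hypothetical source feeds; field names are illustrative only.
clickstream = [
    {"customer_id": "c1", "page": "/pricing"},
    {"customer_id": "c2", "page": "/docs"},
    {"customer_id": "c1", "page": "/signup"},
]
crm = {"c1": {"segment": "enterprise"}, "c2": {"segment": "smb"}}
orders = [
    {"customer_id": "c1", "amount": 120.0},
    {"customer_id": "c1", "amount": 80.0},
]


def build_wide_rows(clickstream, crm, orders):
    """Correlate three sources into one wide row per customer."""
    # Every customer starts with the same empty set of columns.
    rows = defaultdict(lambda: {"page_views": 0, "last_page": None,
                                "segment": None, "order_total": 0.0})
    # Behavioral columns come from the clickstream.
    for event in clickstream:
        row = rows[event["customer_id"]]
        row["page_views"] += 1
        row["last_page"] = event["page"]
    # Descriptive columns come from the CRM.
    for customer_id, attrs in crm.items():
        rows[customer_id]["segment"] = attrs["segment"]
    # Financial columns are aggregated from orders.
    for order in orders:
        rows[order["customer_id"]]["order_total"] += order["amount"]
    return dict(rows)


wide = build_wide_rows(clickstream, crm, orders)
```

Each new source or column means changing this merge logic and every consumer of the wide row, which is why the speed of change at integration points matters more as data volumes and velocity grow.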

Many companies reach a point where complexity grows faster than data engineers and architects can support the pace of data change the business requires. Business analysts and data scientists trust the data less as data, process, and model drift increase across the different technology teams at integration points. Technical debt keeps increasing, and everything around working with data gets harder. The cloud doesn’t necessarily solve this complexity: it’s a data problem, not an on-premises versus cloud problem.

Reducing complexity is particularly important because several initiatives (building new customer experiences; gaining 360-degree views of customers; and decisioning for mobile apps, IoT, and augmented reality) are accelerating the movement of real-time data to the center of data management and cloud strategy, with a direct effect on the bottom line. New research has found that 71% of organizations link revenue growth to real-time data (continuous data in motion, such as data from clickstreams, intelligent IoT devices, or social media).

Waves of change

Waves of change are rippling across data architectures to help organizations harness data for real results. Over 80% of new data is unstructured, which has helped bring NoSQL databases to the forefront of database strategy. The increasing popularity of the data mesh concept highlights the fact that lines of business need to be more empowered with data. Data fabrics are picking up momentum as a way to improve analytics across different analytical platforms. All this change requires technology leadership to refocus vision and strategy. The place to start is real-time data, which is becoming the central data pipeline for an enterprise data ecosystem.

There’s a new concept that brings unity and synergy to applications, streaming technologies, databases, and cloud capabilities in a cloud-native architecture; we call this the “real-time data cloud.” It’s the foundational architecture and data integration capability for high-value data products. Data and cloud strategy must align. High-value data products can have board-level KPIs and metrics associated with them. The speed at which organizations can manage changes to real-time data structures for analytics will determine the industry leaders, because these capabilities will define the customer experience.

Making the right data platform decisions

An important first step in making the right technology decisions for a real-time data cloud is to understand the capabilities and characteristics required of data platforms to execute an organization’s business operating model and road map. Delivering business value should be the foundation of a real-time data cloud platform; the ability to demonstrate to business leaders exactly how a data ecosystem will drive business value is critical. It also must deliver any data, of any type, at scale, in a way that development teams can easily take advantage of to build new applications.

The article “What Stands Between IT and Business Success” highlights the importance of moving away from a siloed perspective and focusing on optimizing how data flows through a data ecosystem. Let’s look at this from an analytics perspective.

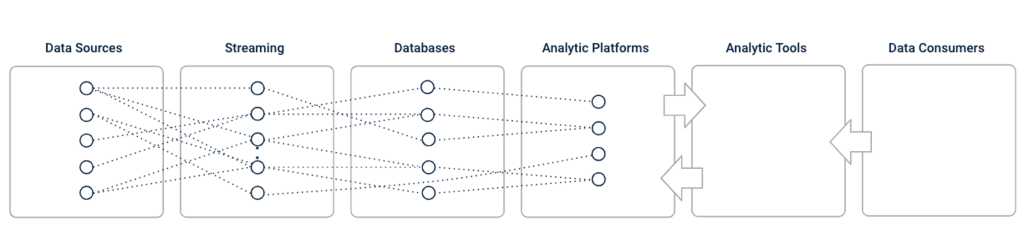

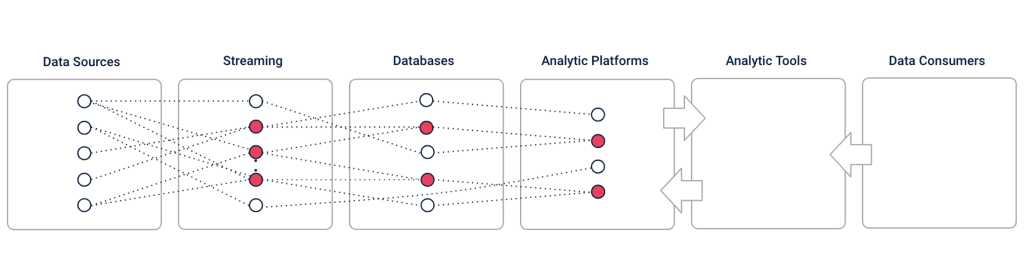

Data should flow through an ecosystem as freely as possible, from data sources to ingestion platforms to databases and analytic platforms. Data or derivatives of the data can also flow back into the data ecosystem. Data consumers (analytics teams and developers, for example) then generate insights and business value from analytics, machine learning, and AI. A data ecosystem needs to streamline the data flows, reduce complexity, and make it easier for the business and development teams to work with the data in the ecosystem.

DataStax

IDC Market Research highlights that companies can lose up to 30% in revenue annually due to inefficiencies resulting from incorrect or siloed data. Frustrated business analysts and data scientists deal with these inefficiencies every day. Taking months to onboard new business analysts, difficulty understanding and trusting data, and delays in fulfilling business requests for changes to data are hidden costs; they can be difficult to understand, measure, and (more importantly) correct. Research from Crux shows that businesses underestimate their data pipeline costs by as much as 70%.

Data-in-motion is ingested into message queues, publish/subscribe (pub/sub) messaging, and event streaming platforms. For data-in-motion, integration points occur in memory and data caches and in dashboards that drive real-time decisioning and customer experiences. Data integration points also show up in databases. How well data-in-motion is integrated with databases affects the quality of data integration in analytic platforms. The complexity at data integration points affects the quality and speed of innovation for analytics, machine learning, and artificial intelligence across all lines of business.

DataStax

Standardize to optimize

To reduce the complexity at data integration points and improve the ability to make decisions in real time, organizations must reduce the number of technologies that converge at these points. One way to do this is to use a multi-purpose data ingestion platform that supports message queuing, pub/sub, and event streaming. Likewise, a multi-model database that supports a wide range of use cases reduces the need to integrate data from many single-purpose databases. Kubernetes is also becoming the standard for managing cloud-native applications, and choosing cloud-native data ingestion platforms and databases lets Kubernetes align applications, data pipelines, and databases.
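Why can one ingestion platform cover message queuing, pub/sub, and event streaming? A minimal Python sketch, assuming a single in-memory append-only log with a read cursor per subscription (the `EventLog` class, its methods, and the subscription names are hypothetical and are not the API of any product): consumers that share a subscription split the events like a queue, independent subscriptions each receive every event like pub/sub, and retained events can be replayed like a stream.

```python
from collections import defaultdict


class EventLog:
    """Toy append-only log standing in for a multi-purpose ingestion platform.

    - Event streaming: events are retained and can be replayed from an offset.
    - Pub/sub: each subscription keeps its own cursor, so every subscription
      sees every event.
    - Queuing: consumers sharing one subscription share its cursor, so each
      event is handed to only one of them.
    """

    def __init__(self):
        self.events = []
        self.cursors = defaultdict(int)  # subscription name -> next offset

    def publish(self, event):
        self.events.append(event)

    def poll(self, subscription):
        """Return the next unread event for a subscription, or None if caught up."""
        offset = self.cursors[subscription]
        if offset >= len(self.events):
            return None
        self.cursors[subscription] = offset + 1
        return self.events[offset]

    def replay(self, from_offset=0):
        """Streaming-style re-read of retained events."""
        return list(self.events[from_offset:])


log = EventLog()
for i in range(4):
    log.publish({"order_id": i})

# Queuing: two workers poll the shared "billing" subscription in turn,
# so each event is handed to only one of them.
worker_a = [log.poll("billing"), log.poll("billing")]
worker_b = [log.poll("billing"), log.poll("billing")]

# Pub/sub: an independent "analytics" subscription still sees every event.
analytics = [log.poll("analytics") for _ in range(4)]

# Streaming: the retained log can be replayed from an earlier offset.
replayed = log.replay(from_offset=2)
```

Real platforms add acknowledgements, redelivery, partitioning, concurrent consumers, and durable storage that this toy omits. Apache Pulsar, for example, exposes a similar choice through subscription types: a shared subscription distributes messages across its consumers queue-style, while separate subscriptions each receive the full stream.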

As noted in the book Enterprise Architecture as Strategy: Creating a Strategy for Business Execution, “Standardize, to optimize, to create a compound effect across the business.” In other words, streamlining a data ecosystem reduces complexity and increases the speed of innovation with data.

Where organizations win with data

Complexity generated from disparate data technology platforms increases technical debt, making data consumers more dependent on centralized teams and specialized experts. Innovation with data occurs at data integration points. There’s been too much focus on selecting data platforms based on the technical specifications and mechanics of data ingestion and databases, rather than on standardizing on technologies that help drive business insights.

Data platforms and data architectures need to be designed from the outset with a heavy focus on building high-value, analytic data assets and driving revenue, and on enabling these data assets to evolve as business requirements change. Data technologies need to reduce complexity to accelerate business insights. Organizations should focus on data integration points because that’s where they win with data. A successful real-time data cloud platform needs to streamline and standardize data flows and their integrations throughout the data ecosystem.


About George Trujillo:

George is principal data strategist at DataStax. Previously, he built high-performance teams for data-value-driven initiatives at organizations including Charles Schwab, Overstock, and VMware. George works with CDOs and data executives on the continual evolution of real-time data strategies for their enterprise data ecosystem.


Read More from This Article: How to Pinpoint Where Your Organization Wins (and Loses) with Data
