Large language models (LLMs) just keep getting better. In the roughly two years since OpenAI jolted the news cycle with the introduction of ChatGPT, we’ve already seen the launch and subsequent upgrades of dozens of competing models. From Llama 3.1 to Gemini to Claude 3.5 to OpenAI o1, the list keeps growing, along with a legion of new tools and platforms used for developing and customizing these models for specific use cases.

In addition, we’ve seen the introduction of a wide variety of small language models (SLMs), industry-specific LLMs, and, most recently, agentic AI models. The sheer number of options and configurations, not to mention the costs associated with these underlying technologies, is multiplying so quickly that it’s creating some very real challenges for businesses that have been investing heavily to incorporate AI-powered capabilities into their workflows.

In fact, business spending on AI rose to $13.8 billion this year, up some 500% from last year as companies in every industry are shifting from experimentation to execution and embedding AI at the core of their business strategies. For companies that want to keep pace with each new advancement in a world that’s moving this fast, simply buying the latest, greatest, most powerful LLM will not address their needs.

Computing costs rising

Raw technology acquisition costs are just a small part of the equation as businesses move from proof of concept to enterprise AI integration. As many companies that have already adopted off-the-shelf GenAI models have found, getting these generic LLMs to work for highly specialized workflows requires a great deal of customization and integration of company-specific data.

Applying customization techniques like prompt engineering, retrieval-augmented generation (RAG), and fine-tuning to LLMs involves massive data processing and engineering costs that can quickly spiral out of control, depending on the level of specialization needed for a specific task. In 2023 alone, Gartner found that companies deploying AI spent between $300,000 and $2.9 million on inference, grounding, and data integration for proof-of-concept AI projects alone. Those numbers are only growing as AI implementations get larger and more complex.

The rise of vertical AI

To address that issue, many enterprise AI applications have started to incorporate vertical AI models. These domain-specific LLMs, which are more focused and tailor-made for specific industries and use cases, are helping to improve the level of precision and detail needed for certain specialized business functions. Spending on vertical AI has increased 12x this year as more businesses recognize the improvements in data processing costs and accuracy that can be achieved with specialized LLMs.

At EXL, we recently launched a specialized Insurance Large Language Model (LLM) leveraging NVIDIA AI Enterprise to handle the nuances of insurance claims in the automobile, bodily injury, workers’ compensation, and general liability segments. Our LLM was built on EXL’s 25 years of experience in the insurance industry and was trained on more than a decade of proprietary claims-related data. We developed the model to address the challenges many of our insurance customers were having trying to leverage off-the-shelf LLMs for highly specialized use cases.

Because those foundational LLMs do not include private insurance data or a domain-specific understanding of insurance business processes, our clients found they were spending too much time and money trying to customize them. Our EXL Insurance LLM is consistently achieving a 30% improvement in accuracy on insurance-related tasks over the top pre-trained models, such as GPT-4, Claude, and Gemini. As a result, our clients are seeing enhanced productivity and faster claim resolution with lower indemnity costs and claims leakage, all powerful value drivers.

Choreographing data, AI, and enterprise workflows

While vertical AI addresses the accuracy, speed, and cost challenges associated with large-scale GenAI implementation, it does not, on its own, deliver an end-to-end workflow.

To integrate AI into enterprise workflows, we must first do the foundational work to get our clients’ data estate optimized, structured, and migrated to the cloud. This data engineering step is critical because it sets up the formal process through which analytics tools will continue to be informed, even as the underlying models keep evolving over time. It requires the ability to break down silos between disparate data sets and keep data flowing in real time.

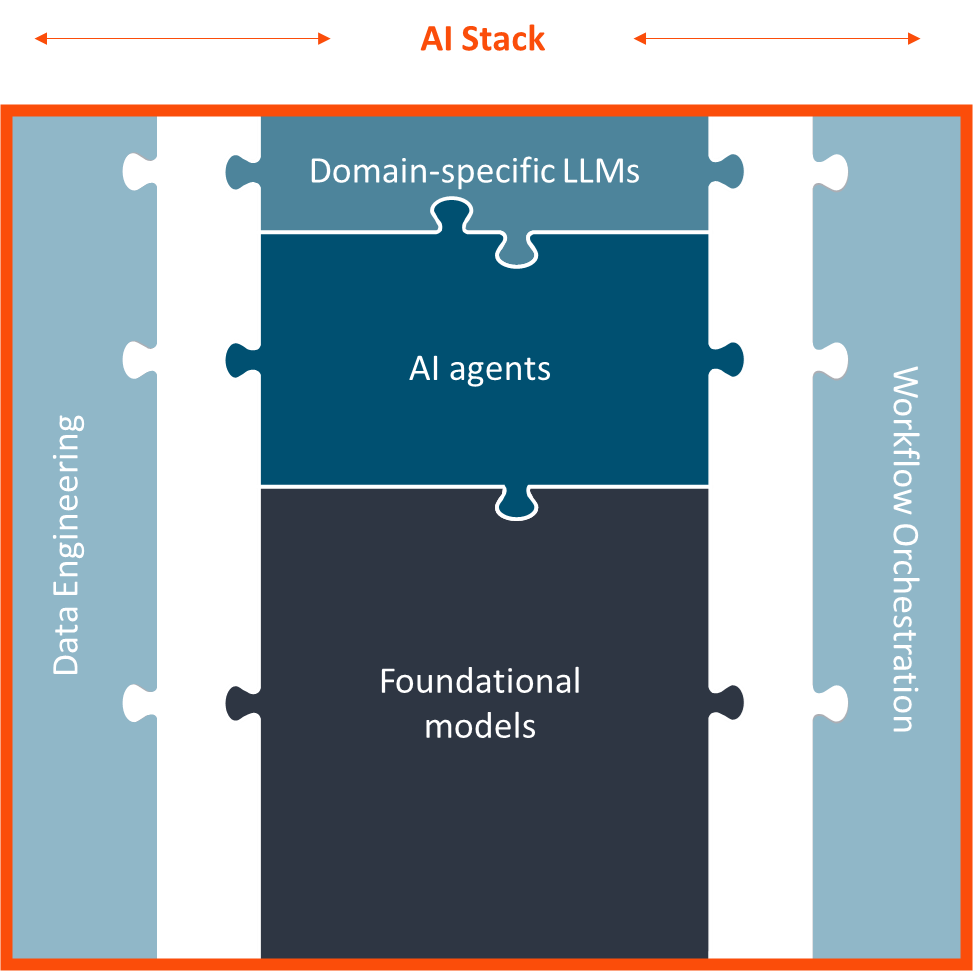

Once the data foundation is in place, the next step is to select and embed the best combination of AI models into the workflow to optimize for cost, latency, and accuracy. At EXL, our repertoire of AI models includes advanced pre-trained language models, domain-specific fine-tuned models, and intelligent AI agents suited for targeted tasks. These models are then integrated into workflows along with human-in-the-loop guardrails. This process requires not only technical expertise in designing the most effective AI architecture but also deep domain knowledge to provide context and drive the adoption needed to deliver superior business outcomes.
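To make the cost, latency, and accuracy trade-off concrete, here is a minimal sketch of one way a workflow could choose among several models. It is an illustration only, not a description of EXL’s implementation: the model names, the figures in `CATALOG`, and the `pick_model` and `route` helpers are all hypothetical. The rule is simple: keep the models that meet the task’s accuracy and latency requirements, pick the cheapest of those, and hand the task to a human reviewer when nothing qualifies.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ModelOption:
    name: str             # hypothetical label, not a real product
    cost_per_call: float  # illustrative cost per request, in dollars
    latency_ms: float     # illustrative typical response time
    accuracy: float       # illustrative task accuracy, between 0 and 1


# Made-up catalog; real figures would come from benchmarking each model
# on the specific task.
CATALOG: List[ModelOption] = [
    ModelOption("general-llm", 0.030, 1200, 0.70),
    ModelOption("domain-llm", 0.012, 600, 0.91),
    ModelOption("small-model", 0.002, 150, 0.62),
]


def pick_model(options: List[ModelOption],
               min_accuracy: float,
               max_latency_ms: float) -> Optional[ModelOption]:
    """Return the cheapest model meeting both constraints, or None."""
    eligible = [
        m for m in options
        if m.accuracy >= min_accuracy and m.latency_ms <= max_latency_ms
    ]
    if not eligible:
        return None
    return min(eligible, key=lambda m: m.cost_per_call)


def route(min_accuracy: float, max_latency_ms: float) -> str:
    """Name the model to use, or fall back to human review (the guardrail)."""
    choice = pick_model(CATALOG, min_accuracy, max_latency_ms)
    return choice.name if choice is not None else "human-review"
```

With these made-up numbers, a low-stakes task that needs a fast answer lands on the small model, a task with a high accuracy bar lands on the domain-specific model, and a task whose accuracy bar no model clears goes to a person.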


The goal here is to make AI integration feel completely seamless to end users. They should not be jumping in and out of different tools to access AI; the technology needs to meet them where they are in the existing applications they’re already using.

For example, EXL is currently working on a project with a multinational insurance company designed to improve underwriting speed and accuracy with AI. At its core, that process involves extracting key information about the individual customer from unstructured data, such as medical records and financial data, and then analyzing that information to make an underwriting decision. We created a multi-agent solution in which one agent used specialized LLMs for data extraction alongside our EXL Insurance LLM for data summarization and insight generation. Meanwhile, a separate AI agent used machine learning and analytics techniques to make underwriting and coverage decisions based on the outputs from the first agent. By orchestrating, engineering, and optimizing the best combination of AI models, we were able to seamlessly embed AI into underwriting workflows without adding excessive data processing costs. Similarly, we orchestrated and engineered another multi-agent solution for a leading bank in the U.S. to autonomously address lost card calls.
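The shape of such a two-agent flow can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the solution built for the client: regular expressions stand in for the LLM-based extraction, a string template stands in for the summarization model, and a pair of hand-written rules stands in for the machine learning decision model. Every function name, field, and threshold below is hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Dict


@dataclass
class Extracted:
    age: int
    smoker: bool
    annual_income: int


def extraction_agent(document: str) -> Extracted:
    """Stand-in for LLM extraction: pull three fields out of free text."""
    age = int(re.search(r"age[:\s]+(\d+)", document, re.I).group(1))
    smoker_text = re.search(r"smoker[:\s]+(yes|no)", document, re.I).group(1)
    income = int(re.search(r"income[:\s]+(\d+)", document, re.I).group(1))
    return Extracted(age, smoker_text.lower() == "yes", income)


def summarize(data: Extracted) -> str:
    """Stand-in for the summarization model: a fixed template."""
    status = "smoker" if data.smoker else "non-smoker"
    return f"{data.age}-year-old {status}, income {data.annual_income}"


def decision_agent(data: Extracted) -> str:
    """Stand-in for the ML decision model: two hypothetical rules.

    Anything other than a clear approval is referred to a human
    underwriter, which is where a human-in-the-loop guardrail would sit.
    """
    if data.smoker or data.age > 60:
        return "refer"
    return "approve"


def run_pipeline(document: str) -> Dict[str, str]:
    """Orchestrate the agents: extract once, then summarize and decide."""
    data = extraction_agent(document)
    return {"summary": summarize(data), "decision": decision_agent(data)}
```

The point of the sketch is the hand-off: the second agent never reads the raw documents, only the structured output of the first, so each step can be swapped for a cheaper or more accurate model without touching the other.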

Strategic AI orchestration is the real game-changer

To unlock real, sustainable business value from AI, businesses need to focus less on finding a silver bullet in a single LLM. Instead, they should prioritize finding the right balance between different types of models, each with its own specialized function and all working together through APIs, and invest in the data modernization needed to optimize specific workflows cost-effectively. In this way, developing an enterprise AI strategy has become a lot more like conducting an orchestra of highly specialized parts than hunting for the ultimate killer app or the perfect do-it-all model.

To learn more, visit us here.

About the author:

Rohit Kapoor is chairman and CEO of EXL, a leading data analytics and digital operations and solutions company.
