In 2025, insurers face a data deluge driven by expanding third-party integrations and partnerships. Many still rely on legacy platforms, such as on-premises warehouses or siloed data systems. These environments often consist of multiple disconnected systems, each managing a distinct function (policy administration, claims processing, billing or customer relationship management) and each generating rapidly growing volumes of data as the business scales. The financial and security implications are significant. Maintaining legacy systems can consume a substantial share of IT budgets, up to 70% according to some analyses, diverting resources that could otherwise be invested in innovation and digital transformation.

In my view, the problem is not simply that these systems are old. Their fundamental design principles (siloed, batch-focused, schema-rigid and often proprietary) are inherently misaligned with the demands of a modern, agile, data-centric and AI-enabled insurance industry. This mismatch creates ongoing friction that reduces operational efficiency, inflates costs, weakens security and hampers our ability to innovate.

This is where Delta Lakehouse architecture truly shines. Specifically, within the insurance industry, where data is the lifeblood of innovation and operational effectiveness, embracing such a transformative approach is essential for staying agile, secure and competitive.

Approach

Sid Dixit

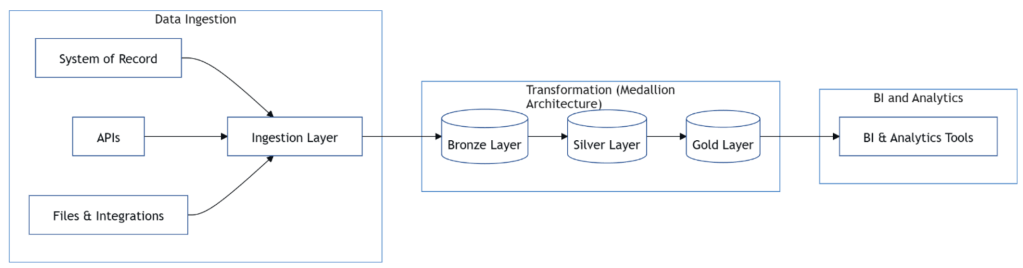

Implementing a lakehouse architecture is a three-step journey, and each step demands dedicated focus and independent treatment.

Step 1: Data ingestion

- Identify your data sources. First, list out all the insurance data sources. These include older systems (like underwriting, claims processing and billing) as well as newer streams (like telematics, IoT devices and external APIs).

- Collect your data in one place. Once you know where your data comes from, bring it all together into a central repository. This unified view makes it easier to manage and access your data.
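
The two ingestion steps above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the source names, the `Source` class and the `land` helper are hypothetical, and an in-memory list stands in for the central repository.

```python
import json
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Source:
    name: str  # hypothetical source name
    kind: str  # "legacy" system or newer "stream"


# Step 1a: an explicit inventory of known data sources (illustrative names).
SOURCES = [
    Source("underwriting", "legacy"),
    Source("claims", "legacy"),
    Source("billing", "legacy"),
    Source("telematics", "stream"),
]


def land(central: list, source: Source, payload: dict) -> dict:
    """Step 1b: append one raw record to the central store, tagged with its origin."""
    if source not in SOURCES:
        raise ValueError(f"unknown source: {source.name}")
    record = {
        "source": source.name,
        "kind": source.kind,
        "ingested_at": datetime.now(timezone.utc).isoformat(),
        "raw": json.dumps(payload, sort_keys=True),  # payload stored unmodified
    }
    central.append(record)
    return record


central_store = []  # stand-in for the central repository
land(central_store, SOURCES[1], {"claim_id": "C-1", "amount": "1200.50"})
land(central_store, SOURCES[3], {"vehicle": "V-9", "speed_kph": 72})
```

Tagging every record with its source and ingestion time keeps the origin of each row traceable after everything has been brought into one place.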

Step 2: Transformation (using ELT and Medallion Architecture)

- Bronze layer: Keep it raw. Save your data exactly as you receive it. This raw backup is important if you ever need to trace back and verify the original input. The raw data can be ingested in batches or as a stream (for example, through a message broker such as Kafka). Platforms like Databricks offer built-in tools like Auto Loader to make this ingestion process seamless.

- Silver layer: Clean and standardize. Next, clean and organize the raw data. This step transforms it into a consistent format, making sure the data is reliable and ready for analysis. Delta Lake's ACID transactions help here: each cleaning job either commits in full or leaves the table unchanged.

- Gold layer: Create business insights. Finally, refine and aggregate the clean data into insights that directly support key insurance functions like underwriting, risk analysis and regulatory reporting.
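
To make the three layers concrete, here is a minimal sketch in plain Python rather than Spark or Delta Lake code. The claim records, field names and the `to_silver` and `to_gold` helpers are assumptions chosen for illustration only.

```python
from collections import defaultdict

# Bronze: records kept exactly as received (hypothetical claim feed).
bronze = [
    {"claim_id": "C-1", "policy": " P-100 ", "amount": "1200.50", "status": "OPEN"},
    {"claim_id": "C-2", "policy": "P-100", "amount": "300", "status": "closed"},
    {"claim_id": "C-3", "policy": "P-200", "amount": "n/a", "status": "open"},
    {"claim_id": "C-1", "policy": " P-100 ", "amount": "1200.50", "status": "OPEN"},
]


def to_silver(rows):
    """Clean and standardize: cast amounts, trim text, drop duplicates and bad rows."""
    seen, clean = set(), []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # unparseable amount; the raw row remains available in bronze
        if row["claim_id"] in seen:
            continue  # duplicate delivery of the same claim
        seen.add(row["claim_id"])
        clean.append({
            "claim_id": row["claim_id"],
            "policy": row["policy"].strip(),
            "amount": amount,
            "status": row["status"].lower(),
        })
    return clean


def to_gold(rows):
    """Aggregate for the business: total claimed amount per policy."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["policy"]] += row["amount"]
    return dict(totals)


silver = to_silver(bronze)
gold = to_gold(silver)
```

Note that `bronze` is never modified: the malformed and duplicate rows are filtered on the way to silver, so the original input can still be inspected later.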

Step 3: Data governance

- Maintain data quality. Enforce strict rules (schemas) to ensure all incoming data fits the expected format. This minimizes errors and keeps your data trustworthy.

- Ensure reliability. Use mechanisms like ACID transactions to guarantee that every data update is either fully completed or reliably reversed in case of an error. Features like time travel allow you to review historical versions of the data for audits or compliance.

- Streamline processing. Build a system that supports both real-time updates and batch processing, ensuring smooth, agile operations across policy updates, claims and analytics.
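
The first two governance ideas, schema enforcement and all-or-nothing updates, can be illustrated with a toy example. This is a plain Python sketch of the concepts, not Delta Lake's implementation: the schema, the `validate` and `atomic_append` helpers and the in-memory `policies` list are all hypothetical.

```python
# Hypothetical schema for a policy table: column name -> required Python type.
POLICY_SCHEMA = {"policy_id": str, "premium": float}


def validate(row, schema):
    """Raise if the row has missing or extra columns, or a wrongly typed value."""
    if set(row) != set(schema):
        raise ValueError(f"expected columns {sorted(schema)}, got {sorted(row)}")
    for column, expected in schema.items():
        if not isinstance(row[column], expected):
            raise TypeError(f"{column!r} must be {expected.__name__}")


def atomic_append(table, rows, schema):
    """Check the whole batch first, then append it; one bad row rejects the batch."""
    for row in rows:
        validate(row, schema)
    table.extend(dict(row) for row in rows)


policies = []
atomic_append(policies, [{"policy_id": "P-1", "premium": 500.0}], POLICY_SCHEMA)

try:
    # The second row carries the premium as text, so nothing from this batch lands.
    atomic_append(
        policies,
        [
            {"policy_id": "P-2", "premium": 650.0},
            {"policy_id": "P-3", "premium": "700"},
        ],
        POLICY_SCHEMA,
    )
    batch_rejected = False
except TypeError:
    batch_rejected = True
```

Validating the full batch before writing anything is what keeps the table from ending up half updated: after the failed call, `policies` still holds only the first, valid row.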

Delta Lake: Fueling insurance AI

Centralizing data in a Delta Lakehouse architecture improves AI model training and performance: models draw on a single, consistent dataset rather than fragments from separate systems, which supports more accurate insights and predictions. The time travel functionality of the Delta format also lets AI systems access historical versions of the data for training and testing.
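
Why versioned data matters for training can be shown with a small sketch. The `SnapshotLog` class and the trivial "model" below are hypothetical stand-ins written in plain Python; in Delta Lake itself, an earlier version is typically read through Spark with a reader option such as `versionAsOf`.

```python
from statistics import mean


class SnapshotLog:
    """Toy append-only log: every commit produces a new, readable version."""

    def __init__(self):
        self._versions = [[]]  # version 0 is the empty table

    def commit(self, rows):
        """Store a new version containing all earlier rows plus the new ones."""
        self._versions.append(self._versions[-1] + list(rows))
        return len(self._versions) - 1

    def as_of(self, version):
        """Return the rows exactly as they were at the given version."""
        return list(self._versions[version])


def fit_mean_severity(claims):
    """Stand-in for model training: the average claim amount."""
    return mean(claim["amount"] for claim in claims)


log = SnapshotLog()
v1 = log.commit([{"amount": 1000.0}, {"amount": 3000.0}])
model_v1 = fit_mean_severity(log.as_of(v1))

v2 = log.commit([{"amount": 8000.0}])
model_v2 = fit_mean_severity(log.as_of(v2))

# Retraining against the pinned version reproduces the first result,
# even though newer data has arrived since.
reproduced = fit_mean_severity(log.as_of(v1))
```

Recording the version number alongside each trained model is the design choice that makes an experiment repeatable and auditable later.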

A critical consideration is how the enterprise AI platform is implemented. Modern AI models, particularly large language models, frequently require real-time data processing capabilities. Traditional machine learning models typically target a single use case, whereas GenAI can learn and address multiple use cases at scale. In this context, Delta Lake manages these diverse data requirements and provides a unified data platform for enterprise GenAI initiatives. It addresses fundamental challenges in data quality, versioning and integration, facilitating the development and deployment of high-performance GenAI models.

This unification of data engineering, data science and business intelligence workflows contrasts sharply with traditional approaches that require cumbersome data movement between disparate systems (e.g., a data lake for exploration, a data warehouse for BI and separate ML platforms). The lakehouse creates a synergistic ecosystem that shortens the path from raw data collection to deployed AI models generating tangible business value, such as reduced fraud losses, faster claims settlements, more accurate pricing and enhanced customer relationships.

Legacy out, Delta in

To sum up, transitioning from outdated, rigid legacy systems to a contemporary, resilient lakehouse architecture supported by Delta Lake is an important strategic change for insurance businesses striving for long-term growth and operational excellence. This shift involves more than implementing modern technology; it means re-architecting the data foundation to realize its full potential.

Harnessing the power of artificial intelligence requires centralizing data, guaranteeing its dependability with features like ACID transactions and schema enforcement, and enabling unified processing of all data types. Insurers that establish this approach successfully will be in a strong position to innovate more quickly, run more smoothly, control risk more skillfully and provide better customer service.

Sid Dixit, a seasoned principal architect with more than 15 years of expertise, has been at the forefront of IT modernization and digital transformation within the insurance sector. Specializing in cloud infrastructure, cybersecurity and enterprise architecture, he has successfully led large-scale cloud migrations and built AI-ready data engineering platforms, ensuring scalability and innovation are seamlessly aligned with organizational goals. Throughout his career, Sid has worked closely with C-level executives and organizations to adopt forward-thinking strategies that modernize their technology roadmaps. His initiatives have revolutionized operations, driving unparalleled efficiencies both within the insurance sector and his organization.

This article was made possible by our partnership with the IASA Chief Architect Forum. The CAF’s purpose is to test, challenge and support the art and science of Business Technology Architecture and its evolution over time as well as grow the influence and leadership of chief architects both inside and outside the profession. The CAF is a leadership community of the IASA, the leading non-profit professional association for business technology architects.

