AI’s transformative potential introduces technological ethical dilemmas like bias, fairness, transparency, accuracy/hallucinations, environment, accountability, liability and privacy. Likewise, behavioral ethical dilemmas such as automation bias, moral hazard, self-misrepresentation, academic deceit, malicious intent, social engineering and unethical content generation are typically out of the passive control of technology.

By proactively addressing both the technical and the behavioral ethical concerns, we can work toward a responsible, equitable and beneficial integration of AI tools into everyday solutions, products and human activities while mitigating regulatory fines and protecting the corporate brand, ensuring trust.

While AI technology advances at an enormous pace, and regulators race to keep up with it, guidance on the “what” and “why” of ethics in AI is abundant. In “AI governance: Act now, thrive later,” author Stephen Kaufman offers the prevailing guidance: “Companies need to create and implement AI governance policies so that AI can deliver benefits to the organization and the customer, to provide a fair, safe and inclusive system that is trusted by the users.”

These internal policies ultimately must align to regulations and serve to provide evidence of compliance with governance across an organization’s total consumer footprint.

Let’s focus on providing practical guidance for senior business and technology leaders on the “how” by prioritizing and enabling responsible AI development through architecture and engineering design that is responsive (as opposed to reactive) to the continuous and fast-changing laws, policies and practices as they emerge.

The challenge

As laws and regulations around AI emerge, they are often split between regional and international interpretations. These differences can complicate building corporate governance as a base for regulatory adherence.

Let’s examine the current landscape of emerging global compliance efforts to fully understand their alignments and differences. While they generally align on principles like transparency, accountability and fairness, significant regional differences remain in enforcement mechanisms, the role of government oversight and the balance between innovation and regulation:

| Framework | Alignment with other frameworks | Key differences |
|---|---|---|
| NIST AI Risk Management Framework (RMF) (USA) | Emphasizes risk management, transparency, and governance, aligning with EU AI Act’s focus on accountability and the ICO’s transparency principles. It promotes a multidisciplinary approach similar to OECD’s inclusiveness principles. | The NIST framework is voluntary, providing guidelines rather than binding regulations, contrasting with the EU’s legally enforceable AI Act and China’s stringent regulatory approach. It also focuses heavily on technical standards and cross-sectoral applications. |
| EU AI Act | Aligns with global efforts on transparency, accountability, and risk categorization, similar to NIST RMF and Canada’s Bill C-27. It also shares a human rights-based approach seen in OECD’s guidelines. | The EU AI Act introduces a strict legal framework with a detailed classification of AI risks and mandatory requirements for high-risk systems, which is more prescriptive than NIST’s voluntary framework or the UK’s principles-based approach. |
| Canada’s Bill C-27 | Aligns with EU AI Act in regulating high-risk AI systems and enforcing accountability measures. Shares NIST RMF’s focus on mitigating biased outputs and promoting public transparency. | Bill C-27 uniquely integrates AI governance within broader privacy and data protection laws, emphasizing personal data use in AI development, which is more specific than the general frameworks seen in NIST and OECD. |
| UK ICO’s Strategic Approach | Emphasizes data protection, fairness, and accountability, closely aligning with EU GDPR and the AI Act, as well as NIST’s governance focus. Transparency and explainability are also shared principles with NIST and OECD. | The UK approach is less regulatory and more guidance-oriented, focusing on existing data protection laws rather than new AI-specific regulations, differing from the more prescriptive EU and Canadian models. |
| OECD Initial Policy Considerations for Generative AI | Shares common principles of transparency, fairness, and bias mitigation with NIST, EU AI Act, and the UK ICO. Promotes international cooperation similar to NIST’s global standardization efforts. | The OECD’s approach is more high-level and advisory, focusing on fostering international dialogue and best practices rather than specific legal or regulatory frameworks. It also uniquely highlights the socio-economic impacts of generative AI. |
| China’s Interim Measures for Generative AI Services | Aligns with global emphasis on transparency, content moderation, and data governance, similar to the EU AI Act and OECD principles. It also focuses on algorithmic accountability akin to the UK ICO’s guidelines. | China’s measures are more restrictive, with heavy state oversight and content control, emphasizing political alignment and national security, which is stricter than Western frameworks emphasizing individual rights and corporate responsibility. |
| Bipartisan AI Task Force Report (USA) | Aligns with NIST RMF in promoting safe and accountable AI development. Emphasizes governance and risk management similar to the EU AI Act and Canada’s Bill C-27. | Focuses on government use of AI, ensuring public transparency and accountability in federal AI applications, which is more specific than the broader, cross-sectoral approaches of other frameworks. |

Regulations and laws are often volatile as political influences can upend them on a moment’s notice:

- The USA Executive Order (EO) on Safe, Secure and Trustworthy Artificial Intelligence (14110) issued on October 30, 2023, was rescinded on January 20, 2025.

- The International AI Declaration, formalized during the Artificial Intelligence Action Summit held in Paris on February 10-11, 2025, is a collaborative commitment by over 60 nations to promote the responsible development and deployment of artificial intelligence. Notably, the United States and the United Kingdom chose not to sign the declaration.

This volatility extends beyond regulatory compliance. The realm of public/social perception and influence, while not formally enforceable by law, often equally influences corporations to respond to the ebbs and flows of group-think and crowd-sourced opinions.

Add to this mix the fact that AI platforms are still early in maturity and are evolving at an accelerated pace to match hype-driven expectations, and it becomes obvious that hard-coding a specific platform or regulation risks lock-in to policies and technologies that are subject to change at a moment’s notice.

For example, an organization that reactively incorporates the latest popular LLM (like DeepSeek) due to consumer pressure might find it must abandon that model when a new regulation or executive order prohibits its use.
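
One way to limit this kind of lock-in is to keep the choice of model out of the core code. The following is a minimal sketch, not a definitive implementation: `provider_a`, `provider_b`, `ModelPolicy` and `select_provider` are hypothetical names invented for illustration, and the policy object stands in for settings that would normally be loaded from external configuration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


# Hypothetical stand-ins for real vendor integrations (assumption).
def provider_a(prompt: str) -> str:
    return f"[provider_a] {prompt}"


def provider_b(prompt: str) -> str:
    return f"[provider_b] {prompt}"


# Registry that maps a configuration name to an implementation.
PROVIDERS: Dict[str, Callable[[str], str]] = {
    "provider_a": provider_a,
    "provider_b": provider_b,
}


@dataclass
class ModelPolicy:
    preferred: List[str]  # provider names in order of preference
    blocked: List[str]    # providers disallowed by current policy or regulation


def select_provider(policy: ModelPolicy) -> Callable[[str], str]:
    """Return the first preferred provider that is registered and not blocked."""
    for name in policy.preferred:
        if name in PROVIDERS and name not in policy.blocked:
            return PROVIDERS[name]
    raise LookupError("no permitted provider is configured")


# In practice this policy would come from external configuration, not code.
policy = ModelPolicy(preferred=["provider_a", "provider_b"], blocked=[])
print(select_provider(policy)("hello"))

# A new rule disallows provider_a: only the configuration changes.
policy.blocked.append("provider_a")
print(select_provider(policy)("hello"))
```

The calling code never names a specific model, so removing one becomes a configuration change rather than a rewrite.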

How should your organization take control over that which it cannot control?

Taking control

The architecture discipline must continuously evaluate the landscape of emerging compliance directions and synthesize how their overall definition and intent can be translated into actionable architecture and design that best enables compliance. In parallel, implementations must be auditable so that governing bodies can clearly see that regulatory mandates are being met.
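
To make "auditable" concrete, here is a minimal sketch of a decision audit record. The field names and the `audit_record` and `verify_chain` helpers are hypothetical, and chaining each entry to the hash of the previous one is an added assumption (a common tamper-evidence technique), not something the frameworks above prescribe. Inputs and outputs are assumed to be JSON-serializable.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder hash for the first entry in a trail


def _digest(body: Dict[str, Any]) -> str:
    """Hash the entry body using a stable JSON encoding."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def audit_record(inputs: Dict[str, Any], output: Any,
                 model_version: str, prev_hash: str) -> Dict[str, Any]:
    """Build one audit entry that is linked to the previous entry's hash."""
    body = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "inputs": inputs,
        "output": output,
        "prev_hash": prev_hash,
    }
    return {**body, "hash": _digest(body)}


def verify_chain(trail: List[Dict[str, Any]]) -> bool:
    """Check that no entry was altered and that the links are intact."""
    prev = GENESIS
    for entry in trail:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev or entry["hash"] != _digest(body):
            return False
        prev = entry["hash"]
    return True


trail: List[Dict[str, Any]] = []
first = audit_record({"income": 52000}, "approve", "credit-model:1.4.0", GENESIS)
trail.append(first)
trail.append(audit_record({"income": 18000}, "refer", "credit-model:1.4.0", first["hash"]))
print(verify_chain(trail))
```

An auditor who is handed such a trail can confirm which model version produced each decision and detect after-the-fact edits.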

When applied, the capabilities below enable flexible designs and architectures, with supporting patterns for sustainable agility, so that the necessary checks and policies are enforced.

Capabilities that promote greater AI agility

| Capability | Explanations & Patterns | Purpose |
|---|---|---|
| Configurable & Replaceable | Modular and Extensible Architecture – isolate core functionality from optional or configurable features to make it easier to add, update or remove components without impacting the entire system. Externalized and Centralized Configuration – manage environment-specific settings, feature toggles and runtime behavior changes. Dependency Injection and Inversion of Control (IoC). | Design your system so that changes in behavior or features can be made without modifying the core code. |
| Transparency & Explainability | Model-Agnostic Explanation Service – an explanation service is called immediately after a prediction is made to generate a human-readable explanation that can be surfaced via dashboards or logs. Interpretable Surrogate Model – train a simpler, interpretable model to approximate the predictions of the complex model. Decision Audit Trail – a comprehensive logging strategy that records key data points (inputs, outputs, model version, explanation metadata, etc.) associated with every decision made by the AI system. | Articulate how an AI system makes decisions, including detailing the logic, data influences and model behavior. |
| Fairness & Bias Mitigation | Pre-Processing Bias Mitigation – ensure input data is as fair and representative as possible before training by data cleaning, re-sampling, re-weighting or augmenting. In-Processing Fairness Constraint – strategies that incorporate fairness considerations directly into the model training process. Post-Processing Outcome Adjustment – adjust model outputs after training to mitigate biases and promote fairness. | Identify, measure and mitigate biases that exist in data, model design or outputs. |
| Accountability, Auditability & Governance | Comprehensive Audit Trail – ensure transparency, accountability and compliance of AI systems through systematically recording all significant activities within the AI lifecycle. Model Registry and Versioning – centralized repository that tracks all models, including versions, training data snapshots, hyperparameters, performance metrics and deployment status. Governance as Code – governance policies, ethical guidelines, and compliance checks are codified and automated as part of the CI/CD and model deployment pipeline. | Policies, processes, and oversight mechanisms that assign clear responsibilities, manage risk and provide structure to auditing AI systems throughout their lifecycle. |
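
As an illustration of the Governance as Code pattern in the table, the sketch below expresses deployment policies as small functions evaluated against a model registry record. Everything here is hypothetical: the `ModelRecord` fields, the `bias_gap` metric and the 0.05 limit are assumptions chosen for the example, not values taken from any regulation.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class ModelRecord:
    """Hypothetical subset of what a model registry might track."""
    name: str
    version: str
    training_data_snapshot: Optional[str]
    bias_gap: float       # assumed metric: outcome-rate difference between groups
    has_explainer: bool   # whether an explanation service is registered


# A policy returns None when satisfied, or a violation message.
Policy = Callable[[ModelRecord], Optional[str]]


def require_data_snapshot(m: ModelRecord) -> Optional[str]:
    return None if m.training_data_snapshot else "missing training data snapshot"


def require_explainer(m: ModelRecord) -> Optional[str]:
    return None if m.has_explainer else "no explanation service registered"


def max_bias_gap(limit: float) -> Policy:
    def check(m: ModelRecord) -> Optional[str]:
        if m.bias_gap <= limit:
            return None
        return f"bias gap {m.bias_gap:.2f} exceeds {limit:.2f}"
    return check


# The policy set is data: adding or tightening a rule does not touch the pipeline.
POLICIES: List[Policy] = [require_data_snapshot, require_explainer, max_bias_gap(0.05)]


def deployment_violations(model: ModelRecord, policies: List[Policy]) -> List[str]:
    """Run every policy and collect the violations, in policy order."""
    return [msg for policy in policies if (msg := policy(model)) is not None]


candidate = ModelRecord("credit-model", "1.5.0", "snapshot-2025-01", 0.08, False)
for problem in deployment_violations(candidate, POLICIES):
    print("BLOCKED:", problem)
```

A CI/CD step could fail the deployment whenever the returned list is non-empty, which also leaves a record of why a release was stopped.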

Staying innovative, sensibly

The heavy hand of governance can diminish innovation, but this doesn’t need to happen. The same capabilities and patterns used to ensure ethical behaviors and compliance can also be applied to stimulate sensible innovation.

As new LLMs, models, agents, etc. emerge, flexible/agile architecture and best practices in responsive engineering make it possible to infuse new market entries into a given product, service or offering. Feature toggles and threshold logic allow emerging technologies to be included safely.
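
A feature toggle combined with threshold logic might look like the sketch below. The names (`ModelToggle`, `choose_model`, `candidate-model`, `baseline-model`) are hypothetical, and the evaluation score and rollout percentage are assumed inputs that a real system would obtain from its own testing and configuration.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelToggle:
    enabled: bool           # master on/off switch for the new model
    min_eval_score: float   # quality threshold the new model must meet
    rollout_percent: int    # share of users (0-100) routed to the new model


def in_rollout(user_id: str, percent: int) -> bool:
    """Place each user in a stable bucket from 0 to 99 and compare to the rollout share."""
    bucket = int(hashlib.sha256(user_id.encode("utf-8")).hexdigest(), 16) % 100
    return bucket < percent


def choose_model(user_id: str, eval_score: float, toggle: ModelToggle,
                 candidate: str = "candidate-model",
                 baseline: str = "baseline-model") -> str:
    """Use the new model only when every gate passes; otherwise fall back."""
    if (toggle.enabled
            and eval_score >= toggle.min_eval_score
            and in_rollout(user_id, toggle.rollout_percent)):
        return candidate
    return baseline


toggle = ModelToggle(enabled=True, min_eval_score=0.90, rollout_percent=100)
print(choose_model("user-123", eval_score=0.93, toggle=toggle))
print(choose_model("user-123", eval_score=0.85, toggle=toggle))
```

Because the fallback path is always present, switching the toggle off returns every user to the established model without a deployment.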

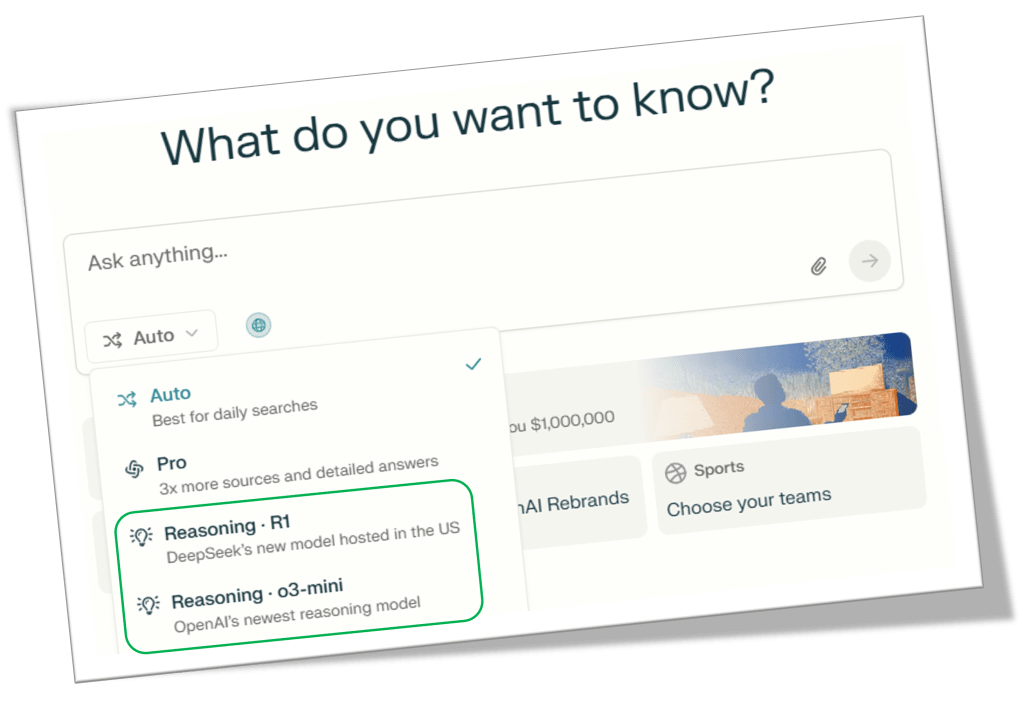

Evidence of this practice exists in various platforms and tool/product vendors’ agile responsiveness to emerging models. The diagram below shows how Perplexity has already incorporated both DeepSeek and OpenAI’s latest models shortly after they became available.

Jim Wilt

It is important to note that while vendors provide early access to emerging technologies, they do not ensure or enforce any form of ethical monitoring or governance in their use. That is ultimately the consumer’s responsibility.

As enterprises incorporate more flexibility regarding the use of emerging technologies, they must do this sensibly and safely. The article “From risk to reward: Mastering the art of adopting emerging technologies” provides a simple approach to incorporating new technologies by leveraging three phases.

- Learning – expose the tech internally only, use only public data (ungoverned) and monitor results.

- Growing – after learning what the tech offers and exposes, start using private data and govern it actively while exposing it only internally. Monitor results.

- Landing – only once you ensure your governance is robust internally, open access to it externally and monitor results.
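
The three phases above can be sketched as an access gate. This is an illustrative reading of the phases, not code from the cited article; `Phase`, `is_request_allowed` and `next_phase` are hypothetical names, and `review_passed` stands in for whatever evidence an organization requires before promoting a technology.

```python
from enum import Enum


class Phase(Enum):
    LEARNING = "learning"  # internal users only, public data only
    GROWING = "growing"    # internal users only, private data allowed and governed
    LANDING = "landing"    # external access opened


def is_request_allowed(phase: Phase, internal_user: bool, uses_private_data: bool) -> bool:
    """Decide whether a request fits the exposure rules of the current phase."""
    if phase is Phase.LEARNING:
        return internal_user and not uses_private_data
    if phase is Phase.GROWING:
        return internal_user
    return True  # LANDING: internal and external users are both allowed


def next_phase(phase: Phase, review_passed: bool) -> Phase:
    """Promote one step only when the review of the current phase has passed."""
    if not review_passed:
        return phase
    if phase is Phase.LEARNING:
        return Phase.GROWING
    if phase is Phase.GROWING:
        return Phase.LANDING
    return phase


phase = Phase.LEARNING
print(is_request_allowed(phase, internal_user=True, uses_private_data=True))
phase = next_phase(phase, review_passed=True)
print(phase, is_request_allowed(phase, internal_user=True, uses_private_data=True))
```

Monitoring, which every phase calls for, is left out here; in practice each allowed request would also be logged.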

A good example of this is how OpenAI rolls out its models where different features are enabled/disabled based on their readiness.


Responsive designs and flexible architectures provide the platform for handling fast-changing features and characteristics. Whether the change is a rapid advance in technology or a response to emerging regulations, your technology practices should always be ready. The faster your teams respond, the more trust you earn.

Trustworthiness and complexity

It might seem rather strange – you cannot (ever) rely on your tools/platforms to be compliant, but you must ensure your products (internal and external) are. When you think deeper about it, however, it makes sense. If your tools implement ethical policies and regulatory compliance differently than your circumstances dictate, you’ll expend more time working to reconfigure their behaviors to align to your needs.

Rather, let your systems empower you to communicate your commitment:

- Your responsiveness signals your intent to comply, in keeping with the urgency of changed policies and regulations.

- Internal discovery and mastery of emerging technologies “hidden” from consumers promotes high confidence when those features and capabilities are made available to all.

- The ability to turn features on/off by thresholds and by regions, in a highly configurable way, allows your solutions to adapt quickly to each situation and environment.
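
Region-specific behavior, the last point above, can be expressed as layered configuration. This is a minimal sketch under stated assumptions: the setting names, the region keys and the values are all hypothetical.

```python
from typing import Any, Dict

# Hypothetical global defaults (assumption).
DEFAULTS: Dict[str, Any] = {"generative_summaries": True, "min_confidence": 0.80}

# Hypothetical per-region overrides; only the differences are listed.
REGION_OVERRIDES: Dict[str, Dict[str, Any]] = {
    "region_x": {"generative_summaries": False},  # feature not permitted here
    "region_y": {"min_confidence": 0.90},         # stricter threshold here
}


def effective_settings(region: str) -> Dict[str, Any]:
    """Start from the defaults and apply any overrides for the region."""
    return {**DEFAULTS, **REGION_OVERRIDES.get(region, {})}


print(effective_settings("region_x"))
print(effective_settings("region_y"))
print(effective_settings("anywhere_else"))
```

Keeping overrides separate from defaults means a regulatory change in one region touches a single entry.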

While managing compliance through agile solution designs and architectures promotes a trustworthy customer experience, it does come at the cost of greater complexity. This means your talent needs to master patterns and practices like those shared above. Your engineering resources are not only key to establishing trust with your consumers; their efforts will also establish greater trust with your governing and executive boards.

When you factor in governance boards, regulatory compliance, audits, responsiveness and solution agility, it all adds to the complexity.

It’s not enough to address a single facet or a subset of them. They all require mindful attention that ensures each component can be adequately addressed using proven patterns, practices and empowered teams.

Next steps

Who would have thought that responsible AI development ultimately comes down to the same solid engineering, architecture and rigor we should already be employing? The big difference here is the stakes: falling short in AI development could cost you the farm!

Every change management framework from Kotter to ADKAR begins with creating a sense of urgency or awareness. What better way to create, or further establish, agile engineering and architecture than in response to the demands AI is currently placing on the enterprise? You can leverage this current climate to accelerate your adoption or advance your practices so that your agility comes from a stance of being proactively responsive!

Vipin Jain, founder and chief architect of Transformation Enablers Inc., brings over 30 years of experience crafting execution-ready IT strategies and transformation roadmaps aligned with business goals while leveraging emerging technologies like AI. He has held executive roles at AIG, Merrill Lynch and Citicorp, where he led business and IT portfolio transformations. Vipin also led consulting practices at Accenture, Microsoft and HPE, advising Fortune 100 firms and U.S. federal agencies. He is currently a senior advisor at WVE.

Jim Wilt, an accomplished innovator with 40 years of experience in technology from aerospace to cloud and operating systems to health and financial organizations, is a board-certified distinguished chief architect at WVE. He has been contributing to the architecture profession worldwide for 30 years through the Microsoft Architecture Advisory Board (MAAB), Microsoft Certified Architect (MCA), as an Open Group Digital Practitioner and through the Iasa CITA-P/D and BTABOK. His passion for the advancement of bleeding-edge technologies has him leading genAI augmented software and platform modernization/digital transformation at Fortune 100 organizations today.

This article was made possible by our partnership with the IASA Chief Architect Forum. The CAF’s purpose is to test, challenge and support the art and science of Business Technology Architecture and its evolution over time as well as grow the influence and leadership of chief architects both inside and outside the profession. The CAF is a leadership community of the IASA, the leading non-profit professional association for business technology architects.
