High-performance computing (HPC) and artificial intelligence (AI) solutions are being deployed on-premises, in the cloud, and at the edge across a wide range of industries. According to a study by Grand View Research, the global HPC market is expected to reach $60 billion by 2025.¹

- In manufacturing, HPC and AI are being used for predictive and prescriptive maintenance, automation of product lifecycle management, and shorter design cycles.

- In healthcare and life sciences, they’re being used to accelerate drug discovery and develop more personalized healthcare.

- The weather and climate research industry employs these solutions for timelier, more precise weather forecasting and a better understanding of climate change.

- The energy, oil, and gas sector uses these solutions for developing real-time wind and solar maps for energy optimization and improved photovoltaic efficiency.

- HPC and AI have transformed the financial services industry, with applications in many areas, including stocks and securities modeling, fraud detection, credit risk assessment, customer engagement, cybersecurity, and regulatory compliance.

But are the power demands of HPC in conflict with organizational sustainability goals?

At the same time, external stakeholders (shareholder groups, consumers, electoral groups) are applying increased scrutiny and pressure to improve sustainability and reduce carbon footprints. Many businesses need to demonstrate improvements in environmental sustainability, but they need to do so in a way that maximizes business benefits.

Recently, data center operators have recognized the challenges associated with ever-growing rack power density, and that the demand for HPC and storage should not be underestimated. Two questions come to mind: Is there a conflict between the need for HPC and corporate social responsibility? How does HPC impact an organization’s carbon emissions?

Perspective on data center energy and water use

On a per-square-foot basis, a modern high-availability enterprise data center consumes 30-100 times as much electricity as a typical commercial office building. The energy intensity of an HPC data center is even higher. Data centers are among the largest electricity users, with various sources estimating that they consume as much as 3% of the world’s electricity.²

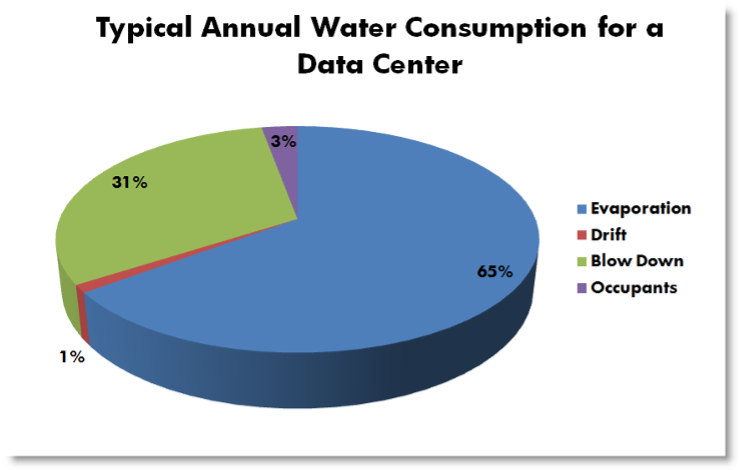

Water consumption also enters the equation, depending on the type of cooling system: data centers use water at much higher rates than a typical commercial office building. Even so, data centers generally consume relatively little water directly compared to the water it takes to generate the electricity that powers them, a fact that should also be taken into consideration. If air-cooled and liquid-cooled HPC facilities both employ a similar water-cooled chiller plant, then site water consumption (e.g., cooling towers) is expected to be similar for air-cooled and liquid-cooled IT. Because of their higher temperature set points, however, liquid-cooled data centers can employ other types of cooling and heat rejection systems (e.g., dry coolers) that can eliminate water use altogether. Figure 1 presents a typical data center on-site water consumption breakdown.

Far more water is used to generate the energy that powers data centers (source) than to cool them (site). Taking the USA as a benchmark, it takes about 7.6 liters of water on average to generate 1 kWh of energy, while an average data center uses 1.8 liters of water for every kWh it consumes. This means that a 3 MW data center with a small office component could consume over 11 million gallons of potable water per year.
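The water figures above can be checked with a few lines of Python. This is a rough sketch, not a measured result: it assumes a constant 3 MW load for all 8,760 hours of the year and applies the per-kWh figures quoted in the text uniformly. The function name `annual_water_use` is illustrative only.

```python
# Rough check of the site vs. source water figures quoted in the text.
# Assumptions (not from the article): constant 3 MW load all year,
# US liquid gallons, and uniform per-kWh water intensity.

LITERS_PER_US_GALLON = 3.785411784
HOURS_PER_YEAR = 8760


def annual_water_use(load_mw, liters_per_kwh):
    """Return (liters, US gallons) of water per year for a constant load."""
    kwh_per_year = load_mw * 1000 * HOURS_PER_YEAR
    liters = kwh_per_year * liters_per_kwh
    return liters, liters / LITERS_PER_US_GALLON


# Site water: 1.8 L per kWh consumed by the data center.
site_liters, site_gallons = annual_water_use(3, 1.8)

# Source water: 7.6 L per kWh generated (USA average quoted in the text).
source_liters, source_gallons = annual_water_use(3, 7.6)

print(f"Site water:   {site_gallons / 1e6:.1f} million gallons/year")
print(f"Source water: {source_gallons / 1e6:.1f} million gallons/year")
print(f"Source-to-site ratio: {source_liters / site_liters:.1f}x")
```

Under these assumptions the site figure comes out at roughly 12.5 million gallons per year, in line with the "over 11 million gallons" estimate, while the water used at the power source is a little more than four times that amount.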

With sustainability regulations and compliance initiatives to address, data center operators are working hard to drive down energy consumption and advance sustainability in their facilities. One way to do that is by adopting HPC solutions.

Can HPC reduce operational carbon?

Operational carbon is generally considered the biggest contributor to total global emissions. It has long been regulated in many countries, while others are taking steps through sustainability initiatives to address the issue. HPC can be more energy and resource efficient than a traditional approach. How? HPC cooling solutions include precise airflow management or the use of water as a cooling medium, which can also be brought into direct contact with the heat source (CPUs, GPUs, memory, etc.), an approach known as Direct Liquid Cooling (DLC).

One apparent benefit of liquid cooling is the increased performance from CPUs/GPUs and the overall system, owing to the lower latency of higher-density systems. Liquid cooling can easily cool up to 200 kW per rack. DLC results in total IT energy savings of about 10% just from eliminating the server fans used in traditional air cooling. Additionally, most if not all of the traditional data hall cooling units and their associated fan power are also eliminated. This significantly reduces energy use and costs as well as capital equipment investment.

One big reason for these savings is the ability to use higher air or water temperatures to cool the equipment; in most climates this can eliminate mechanical cooling for at least most of the year. The colder the air or water required, the more energy is needed. Raising the chiller supply temperature from a traditional 55°F (13°C) to 65°F (18°C) can reduce the energy required for cooling by 20 to 40%. Given that cooling can account for more than 25% of a data center’s total energy, this equates to a potential energy savings of up to 30% of the total energy required for non-IT equipment.
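The relationship between these percentages is easier to follow when worked through. The sketch below is an illustration under stated assumptions rather than the author's own calculation: it assumes a facility PUE of 1.5 (a value not given in the article), so that non-IT equipment accounts for one third of total energy, and takes cooling to be 25% of the total. The function name `chiller_savings` is hypothetical.

```python
# Worked example: how a 20-40% cut in cooling energy maps onto
# total facility energy and onto non-IT energy.
# Assumption (not from the article): PUE = 1.5, so non-IT energy is
# 1 - 1/1.5 = one third of total facility energy.


def chiller_savings(cooling_share, cooling_reduction, pue):
    """Return savings as a fraction of (total energy, non-IT energy).

    cooling_share: cooling energy as a fraction of total facility energy
    cooling_reduction: fractional cut in cooling energy
    pue: power usage effectiveness (total energy / IT energy)
    """
    non_it_share = 1 - 1 / pue
    saved_of_total = cooling_share * cooling_reduction
    saved_of_non_it = saved_of_total / non_it_share
    return saved_of_total, saved_of_non_it


for reduction in (0.20, 0.40):
    of_total, of_non_it = chiller_savings(0.25, reduction, pue=1.5)
    print(f"{reduction:.0%} less cooling energy -> "
          f"{of_total:.0%} of total, {of_non_it:.0%} of non-IT energy")
```

With these inputs, the upper end of the range (a 40% cut in cooling energy) corresponds to about 10% of total facility energy, or about 30% of non-IT energy. A different PUE would shift the non-IT percentage accordingly.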

As stated previously, because HPC infrastructure accepts higher water temperatures, the use of closed-loop liquid cooling, coupled with dry coolers, can also reduce water consumption substantially.

When considering HPC liquid cooling, waste heat recovery from return cooling water presents an opportunity to save energy, reduce heating demand and costs in the facility, and reduce the total carbon footprint. In many cases, the return water temperatures from liquid-cooled racks may exceed 40°C; this water can be used to supplement building heating systems and offset some of the energy that building boilers would otherwise produce to meet the heating demand from offices, kitchens, and other domestic use. Depending on the system type and the heat reuse application, it is possible to recover 50-100% of the heat. This is especially cost effective in colder climates, where heat recovery can considerably offset the purchase cost and environmental impact of burning fossil fuels for heat and hot water in adjacent buildings.
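To give a sense of scale for the 50-100% recovery range, the following sketch estimates annual recoverable heat. The 1 MW liquid-cooled load is a hypothetical value chosen for illustration, and the calculation makes the simplifying assumptions that essentially all IT electrical power ends up as heat in the cooling loop and that the load runs continuously. The function name `recoverable_heat_mwh` is illustrative.

```python
# Estimate of annual recoverable heat from a liquid-cooled IT load.
# Assumptions (not from the article): a hypothetical 1 MW load running
# continuously, with all IT power rejected as heat into the liquid loop.

HOURS_PER_YEAR = 8760


def recoverable_heat_mwh(it_load_mw, recovery_fraction, hours=HOURS_PER_YEAR):
    """Return thermal energy (MWh) recoverable over the given hours."""
    if not 0.0 <= recovery_fraction <= 1.0:
        raise ValueError("recovery_fraction must be between 0 and 1")
    return it_load_mw * recovery_fraction * hours


low = recoverable_heat_mwh(1.0, 0.5)   # 50% recovery
high = recoverable_heat_mwh(1.0, 1.0)  # 100% recovery
print(f"Recoverable heat: {low:,.0f} to {high:,.0f} MWh per year")
```

How much of this heat actually displaces boiler output depends on when and where heating is needed, which this sketch does not model.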

What about the embodied carbon?

Many of the global green initiatives to date have addressed the operational aspects of data center sustainability. However, has enough thought been given to the embodied energy of the facility and the construction of the space itself? Embodied carbon is the total greenhouse gas (GHG) emissions arising in the supply chain prior to building construction, from mining through manufacturing, transportation, and installation of building materials. It is a major source of carbon globally. Unlike operational carbon, embodied carbon emissions can’t be reduced later since they have already been emitted. Data centers use huge amounts of concrete and steel, which are major sources of CO2, and as the sustainability gains from operational efficiencies dry up, tackling embodied carbon in the construction phase will help achieve carbon neutrality.

Hence, another benefit of HPC is its ability to reduce embodied emissions through reduced space and less cooling infrastructure, especially when building a new data center, which also reduces facility capital costs. The savings come primarily from the building shell and interior build-out of the data center. All else being equal, going from 200 watts per square foot to 1,000 watts per square foot or higher and reducing the raised floor area from 10,000 square feet to 2,000 square feet will reduce the first cost of the facility by approximately 10-20%. A typical cost for a facility of this size with concurrently maintainable power and cooling systems would be a minimum of $20M. A 10-20% reduction equates to $2M-$4M.
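The density and cost figures in this example can be verified directly. The short sketch below uses only the numbers quoted above; the function names are illustrative, and percentages are kept as whole numbers so the arithmetic stays exact.

```python
# Check that both layouts in the example support the same design load,
# and compute the first-cost savings range quoted in the text.


def design_load_watts(density_w_per_sqft, floor_area_sqft):
    """Total design load supported by a raised floor area."""
    return density_w_per_sqft * floor_area_sqft


def first_cost_savings(facility_cost_usd, reduction_percent):
    """Savings in dollars for a whole-number percentage reduction."""
    return facility_cost_usd * reduction_percent // 100


low_density_load = design_load_watts(200, 10_000)    # traditional layout
high_density_load = design_load_watts(1_000, 2_000)  # high-density layout

savings_low = first_cost_savings(20_000_000, 10)
savings_high = first_cost_savings(20_000_000, 20)

print(f"Low-density load:  {low_density_load / 1e6:.1f} MW")
print(f"High-density load: {high_density_load / 1e6:.1f} MW")
print(f"First-cost savings: ${savings_low:,} to ${savings_high:,}")
```

Both layouts deliver the same 2 MW of design load, so the comparison is like for like: the high-density design simply needs one fifth of the raised floor area.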

In conclusion, it is easy to assume that HPC conflicts with sustainability goals, but quite the reverse is the case. HPC improves the energy efficiency of modern supercomputers via novel approaches for driving optimal performance per watt. HPC can address and provide real sustainable solutions for some of the most pressing societal issues.

The HPC approach combines resource efficiency with energy efficiency that delivers optimum levels of power, storage, and connectivity. Holistic and sustainable HPC data center design should result in the least amount of infrastructure for power conversion, cooling, space, and resiliency, reducing both the operational and embodied carbon.

To find out more about Digital Next Advisory or engaging with an HPE Digital Advisor please contact us at [email protected] or visit www.hpe.com/digitaltransformation.

¹ https://www.grandviewresearch.com/industry-analysis/high-performance-computing-market

____________________________________

About Munther Salim

Dr. Munther Salim is a Distinguished Technologist in HPE Pointnext Services consulting. His focus includes data center green design, energy efficiency and sustainability, and data center facility operations consulting. He is an active participant and author in many industry organizations such as The Green Grid, 7x24 Exchange, and ASHRAE. He has also advised a number of governments and governmental advisory bodies as they determine their data center energy efficiency strategies in the context of global targets.
